In this article I explain the networking configuration of an HPE SimpliVity HCI solution from both a physical and a logical point of view.

An HPE SimpliVity appliance is built on the HPE DL380 or DL325 Gen10 platform (at the time this article was published), which I will refer to as a SimpliVity host or node. Depending on the model, these appliances are equipped with a combination of one or more LOM and/or PCIe network cards with speeds from 1GbE up to 25GbE. Connectivity is provided through RJ45 or SFP+ ports.

Every HPE SimpliVity node runs one OmniStack Virtual Controller (OVC), a virtual machine that is configured during the deployment of the SimpliVity cluster. This OVC virtual machine is connected to all 3 logical networks discussed in this article.

Logical networks

An HPE OmniStack host requires three separate virtual networks to function properly. These 3 networks are configured during the deployment of the SimpliVity cluster, as part of the installation and configuration of the ESXi hosts.

Management network

The Management network is used by the HPE OmniStack hosts to connect to the vCenter Server. By default, it also carries all ingress and egress Ethernet traffic of the guest virtual machines, but customers can create their own virtual machine networks after the SimpliVity cluster has been deployed.

This network is normally enabled on the 1GbE interfaces when they are available; otherwise, the Management network is enabled on the 10/25GbE interfaces. See below for a practical example.
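The placement rule for the Management network can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not the logic of the HPE Deployment Manager: the `Nic` class, the `management_uplinks` function, and the vmnic names and speeds are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Nic:
    name: str        # ESXi uplink name (hypothetical example values)
    speed_gbps: int  # link speed in Gbit/s


def management_uplinks(nics: List[Nic]) -> List[str]:
    """Return the uplinks that would carry the Management network.

    Prefer the 1GbE interfaces when the node has any; otherwise fall back
    to the 10/25GbE interfaces.
    """
    one_gig = [n.name for n in nics if n.speed_gbps == 1]
    if one_gig:
        return one_gig
    return [n.name for n in nics if n.speed_gbps in (10, 25)]


# Node with 4x1GbE onboard plus a 2x10GbE FlexLOM card.
dl380 = [Nic(f"vmnic{i}", 1) for i in range(4)] + [Nic("vmnic4", 10), Nic("vmnic5", 10)]
# Node without onboard networking, only 2x25GbE.
nc_node = [Nic("vmnic0", 25), Nic("vmnic1", 25)]

print(management_uplinks(dl380))    # ['vmnic0', 'vmnic1', 'vmnic2', 'vmnic3']
print(management_uplinks(nc_node))  # ['vmnic0', 'vmnic1']
```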

Storage network

The Storage network is used by the HPE OmniStack host to connect to the HPE OmniStack storage layer using the NFS protocol via the HPE OmniStack Virtual Controllers (OVC).

It is a hard requirement that the Storage network runs on the 10/25GbE interfaces of the host. This is defined during the deployment of the SimpliVity nodes in the cluster.

Federation network

The Federation network is the preferred interface for virtual controller-to-virtual controller network communication within the cluster. Most of this traffic is data replication and backup traffic between the HPE OmniStack Virtual Controllers. Therefore, this network is also configured on the vSwitch with the 10GbE or 25GbE interfaces. The Federation network is local to the cluster, so it is not routed.

Federation communication across clusters is routed through the Management network, which has a default gateway configured.
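The choice between the Federation and Management networks for OVC-to-OVC traffic can be modeled as follows. This is a simplified sketch that only looks at cluster membership; the `Ovc` class, the `ovc_network` function, and the cluster names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ovc:
    name: str
    cluster: str


def ovc_network(src: Ovc, dst: Ovc) -> str:
    """Return the logical network used between two OVCs (simplified model).

    The Federation network is local and not routed, so it is only used when
    both OVCs are in the same cluster. Traffic to another cluster is routed
    through the Management network and its default gateway.
    """
    if src.cluster == dst.cluster:
        return "Federation"
    return "Management"


ovc_a1 = Ovc("ovc-a1", "Cluster-A")
ovc_a2 = Ovc("ovc-a2", "Cluster-A")
ovc_b1 = Ovc("ovc-b1", "Cluster-B")

print(ovc_network(ovc_a1, ovc_a2))  # Federation
print(ovc_network(ovc_a1, ovc_b1))  # Management
```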

You configure the networking of the HPE OmniStack hosts during the deployment of the HPE SimpliVity cluster(s) through the Deployment Manager, where you assign either separate NICs or a single NIC to handle HPE OmniStack network traffic. A complete overview of an HPE SimpliVity deployment can be found in another article on this website.

Logical network to hardware mapping

These 3 logical networks need to be mapped to the physical network interfaces through the vSwitch(es) of the ESXi hypervisor.

It is important to know that various hardware configurations exist, and the configuration determines how the logical networks are mapped to the external network interfaces of the hardware node.

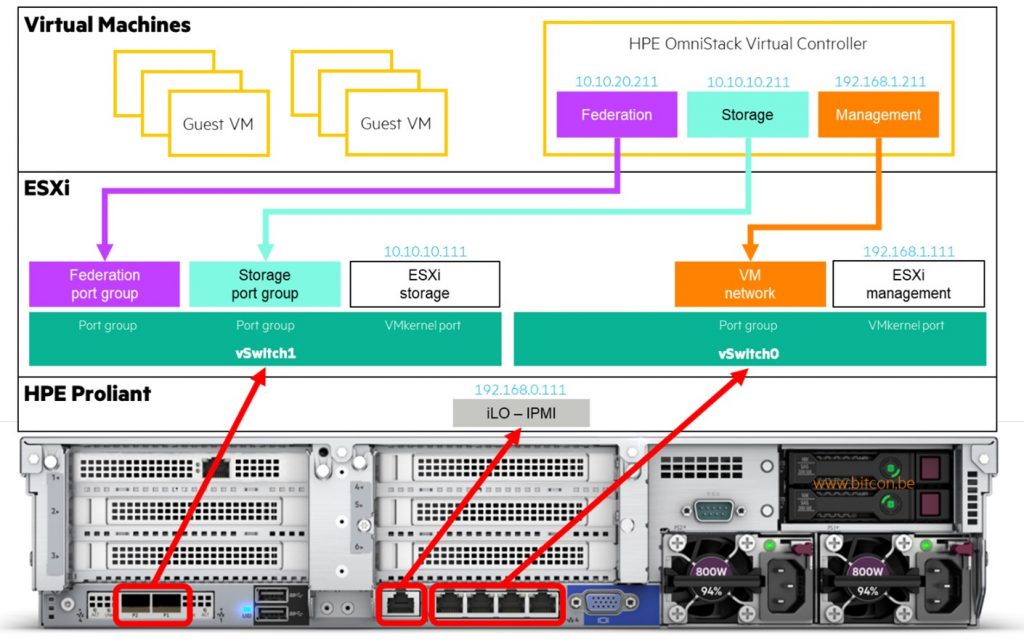

HPE SimpliVity 380 with accelerator card

The ‘traditional’ HPE SimpliVity nodes with the OmniStack Accelerator Card are based on an HPE DL380 Gen10 server. This server is equipped with 4x1GbE interfaces on the motherboard in combination with a FlexLOM card with 2x10GbE or 2x25GbE interfaces.

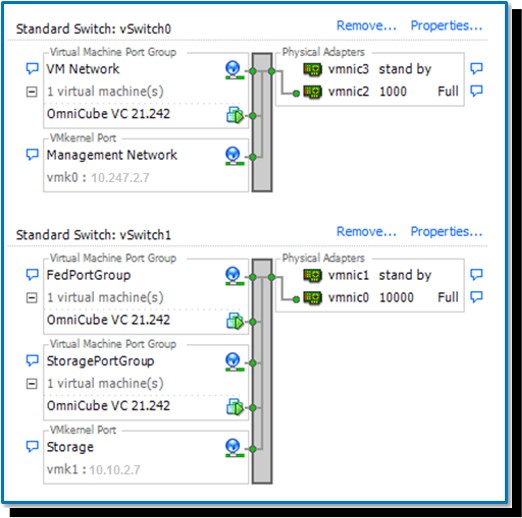

By default, the deployment of this model creates 2 vSwitches: vSwitch0, which is connected to the 4x1GbE interfaces, and vSwitch1, which is connected to the 10/25GbE interfaces.

As shown in the picture, the Management network is connected to vSwitch0 by default, while the Storage and Federation networks are connected to the 10/25GbE interfaces of vSwitch1.

The IP addresses in the pictures are only a reference to show the 3 different IP subnets for the 3 logical networks across all the nodes in the cluster. Using 3 different IP subnets is mandatory.
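An IP plan can be sanity-checked against this rule with Python's standard `ipaddress` module. The subnets and the OVC addresses below are example values, not defaults, and `overlapping_pairs` and `misplaced` are hypothetical helper names.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple

# Example IP plan: one subnet per logical network.
subnets = {
    "Management": ipaddress.ip_network("10.10.10.0/24"),
    "Storage": ipaddress.ip_network("10.10.20.0/24"),
    "Federation": ipaddress.ip_network("10.10.30.0/24"),
}


def overlapping_pairs(nets: Dict[str, ipaddress.IPv4Network]) -> List[Tuple[str, str]]:
    """Return every pair of logical networks whose subnets overlap."""
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


def misplaced(addresses: Dict[str, str], nets: Dict[str, ipaddress.IPv4Network]) -> List[str]:
    """Return the networks for which a node's address lies outside the planned subnet."""
    return [
        name for name, addr in addresses.items()
        if ipaddress.ip_address(addr) not in nets[name]
    ]


ovc1 = {"Management": "10.10.10.11", "Storage": "10.10.20.11", "Federation": "10.10.30.11"}

print(overlapping_pairs(subnets))  # []
print(misplaced(ovc1, subnets))    # []

# A /16 for Storage would overlap both other subnets.
bad_plan = dict(subnets, Storage=ipaddress.ip_network("10.10.0.0/16"))
print(overlapping_pairs(bad_plan))  # [('Management', 'Storage'), ('Storage', 'Federation')]
```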

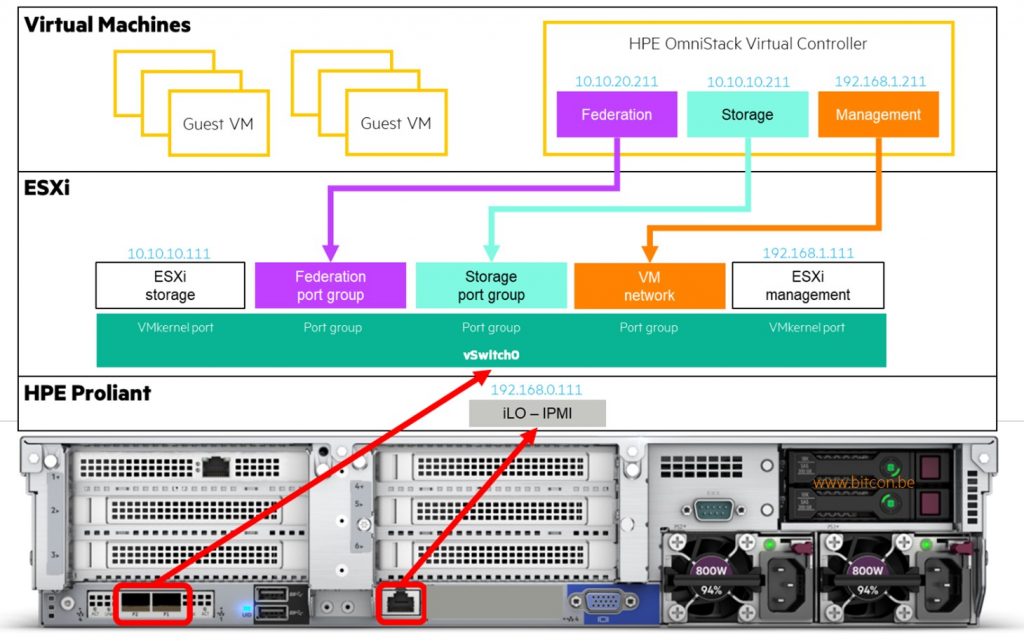

HPE SimpliVity 380G – 380H – 325

The ‘other’ HPE SimpliVity models in the portfolio are equipped with so-called NC motherboards, which have no onboard networking by default. As a result, these HPE SimpliVity nodes only have 2x10/25GbE interfaces by default, unless other options are selected when the solution is ordered.

In the picture I show the ‘minimum’ configuration, with 2x10/25GbE interfaces installed. Other options are available, such as 4x10GbE or additional PCIe NICs in the node for more bandwidth. The principle stays the same: you get one or more vSwitches to which the 3 logical networks are connected. All of this is decided during the installation of the HPE SimpliVity cluster.
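The mapping rules from the previous sections can be checked with a small model of a node's vSwitch layout. This is a sketch under assumptions: the vSwitch names for the 380 with accelerator card follow the defaults described above, while the single-vSwitch layout for an NC motherboard node and the `check_layout` helper are my own illustration.

```python
from typing import Dict, List

# Slowest uplink speed (Gbit/s) behind each vSwitch.
DL380_VSWITCHES = {"vSwitch0": 1, "vSwitch1": 10}
DL380_PORT_GROUPS = {"Management": "vSwitch0", "Storage": "vSwitch1", "Federation": "vSwitch1"}

# Assumed minimum NC motherboard layout: one vSwitch on 2x25GbE.
NC_VSWITCHES = {"vSwitch0": 25}
NC_PORT_GROUPS = {"Management": "vSwitch0", "Storage": "vSwitch0", "Federation": "vSwitch0"}


def check_layout(vswitches: Dict[str, int], port_groups: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the layout passes.

    Every logical network must be mapped to a known vSwitch, and Storage and
    Federation must sit on a vSwitch with 10/25GbE uplinks.
    """
    problems = []
    for network in ("Management", "Storage", "Federation"):
        vswitch = port_groups.get(network)
        if vswitch not in vswitches:
            problems.append(f"{network} is not mapped to a known vSwitch")
        elif network != "Management" and vswitches[vswitch] < 10:
            problems.append(f"{network} needs 10/25GbE uplinks, but {vswitch} is slower")
    return problems


print(check_layout(DL380_VSWITCHES, DL380_PORT_GROUPS))  # []
print(check_layout(NC_VSWITCHES, NC_PORT_GROUPS))        # []

# Moving Storage to the 1GbE vSwitch breaks the hard requirement.
bad = dict(DL380_PORT_GROUPS, Storage="vSwitch0")
print(check_layout(DL380_VSWITCHES, bad))  # ['Storage needs 10/25GbE uplinks, but vSwitch0 is slower']
```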

Jumbo Frames

Storage and Federation networks must be configured with a maximum transmission unit (MTU) of 9000 bytes to enable jumbo frames. A jumbo frame is an Ethernet frame that carries more than the standard 1500-byte payload, with 9000 bytes as the common maximum. Larger frames mean fewer packets, and therefore less header and per-packet processing overhead, for the same amount of data, which improves performance in storage networking environments. The compute node storage network MTU should also be set to 9000.

The MTU of 9000 bytes for the Storage and Federation networks is set during the installation. The Management network keeps the default MTU of 1500 bytes. Jumbo frames only work end to end, so the physical switch ports that carry the Storage and Federation networks must also be configured for jumbo frames.
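The effect of the larger MTU is easy to quantify. The sketch below counts the packets needed to move 1 MiB of data, assuming TCP over IPv4 with a 20-byte IP header and a 20-byte TCP header and no options, and flags hops on a path whose MTU is below 9000. The hop names and the `packets_needed` and `too_small` helpers are hypothetical.

```python
import math
from typing import Dict, List

IPV4_TCP_HEADERS = 40  # 20-byte IPv4 header + 20-byte TCP header, no options


def packets_needed(payload_bytes: int, mtu: int) -> int:
    """Number of packets needed to carry payload_bytes of TCP data at this MTU."""
    return math.ceil(payload_bytes / (mtu - IPV4_TCP_HEADERS))


def too_small(hops: Dict[str, int], required: int = 9000) -> List[str]:
    """Return the hops whose MTU cannot carry packets of the required size."""
    return [hop for hop, mtu in hops.items() if mtu < required]


one_mib = 1024 * 1024
print(packets_needed(one_mib, 1500))  # 719
print(packets_needed(one_mib, 9000))  # 118

storage_path = {"vmk Storage": 9000, "vSwitch1": 9000, "physical switch port": 1500}
print(too_small(storage_path))  # ['physical switch port']
```

Roughly six times fewer packets means six times fewer headers to build and process for the same data. Switch vendors do not all count the MTU the same way (some include the Ethernet header), so check the switch documentation before comparing values.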

VLANs

VMware VLANs are not required, but are recommended. It is a common VMware best practice to assign the management network its own VLAN to separate the management traffic from the virtual machine data traffic. It is also common to create separate networks for vMotion as well as other networks, such as datastore heartbeats. Consider following these VMware recommendations in your HPE SimpliVity environment.

For security purposes, configure isolated VLANs for the HPE SimpliVity Federation before deploying the HPE OmniStack hosts. This protects federation interfaces and data from external discovery or malicious attacks.
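A VLAN plan that follows these recommendations can be checked before deployment. The sketch assumes every network gets its own VLAN; the VLAN IDs and the `vlan_problems` helper are hypothetical. Usable 802.1Q VLAN IDs run from 1 to 4094.

```python
from typing import Dict, List

VALID_VLAN_IDS = range(1, 4095)  # 802.1Q IDs 1-4094; 0 and 4095 are reserved

vlan_plan = {"Management": 10, "vMotion": 20, "Storage": 30, "Federation": 40}


def vlan_problems(plan: Dict[str, int]) -> List[str]:
    """Return problems with a VLAN plan: invalid IDs or networks sharing a VLAN."""
    problems = []
    owner: Dict[int, str] = {}
    for network, vlan in plan.items():
        if vlan not in VALID_VLAN_IDS:
            problems.append(f"{network}: VLAN {vlan} is outside 1-4094")
        elif vlan in owner:
            problems.append(f"{network} shares VLAN {vlan} with {owner[vlan]}")
        else:
            owner[vlan] = network
    return problems


print(vlan_problems(vlan_plan))                          # []
print(vlan_problems({"Storage": 30, "Federation": 30}))  # ['Federation shares VLAN 30 with Storage']
print(vlan_problems({"Federation": 4095}))               # ['Federation: VLAN 4095 is outside 1-4094']
```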

NIC teaming

After you deploy HPE OmniStack hosts to a cluster, you can configure NIC teaming to increase the network capacity of the virtual switch hosting the team and to provide passive failover if an adapter fails or loses power. To use NIC teaming, you must uplink two or more adapters to a virtual switch.

For more details on configuring NIC teaming for a standard vSwitch, see the HPE OmniStack for vSphere Administration Guide. You can also find details on the VMware Knowledge Base site (kb.vmware.com) by searching for NIC teaming in ESXi and ESX.

HPE recommends an Active/Standby setup for the Storage and Federation networks. Both NICs are active at the vSwitch level, but at the level of the Storage and Federation networks the teaming is set to Active/Standby, with each network preferring a different NIC. For instance, you set the Storage network active on vmnic4 and standby on vmnic5, and the Federation network active on vmnic5 and standby on vmnic4.
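The Active/Standby example can be modeled as an explicit failover order per port group. This sketch assumes standard vSwitch port groups named `Storage` and `Federation` (hypothetical names; use the names from your own deployment) and renders the matching `esxcli network vswitch standard portgroup policy failover set` command. Verify the options against the esxcli reference for your ESXi version; on a vSphere Distributed Switch the teaming order is set through vCenter instead.

```python
import shlex
from typing import AbstractSet, Optional

TEAMING = {
    "Storage": {"active": ["vmnic4"], "standby": ["vmnic5"]},
    "Federation": {"active": ["vmnic5"], "standby": ["vmnic4"]},
}


def uplink_in_use(portgroup: str, failed: AbstractSet[str] = frozenset()) -> Optional[str]:
    """Return the uplink carrying the port group's traffic, or None if all have failed.

    The first healthy active uplink is used; standby uplinks only take over
    when every active uplink has failed.
    """
    order = TEAMING[portgroup]["active"] + TEAMING[portgroup]["standby"]
    return next((nic for nic in order if nic not in failed), None)


def failover_command(portgroup: str) -> str:
    """Render the esxcli command that sets this failover order on a standard vSwitch."""
    policy = TEAMING[portgroup]
    return (
        "esxcli network vswitch standard portgroup policy failover set"
        f" -p {shlex.quote(portgroup)}"
        f" -a {','.join(policy['active'])}"
        f" -s {','.join(policy['standby'])}"
    )


print(uplink_in_use("Storage"))              # vmnic4
print(uplink_in_use("Federation"))           # vmnic5
print(uplink_in_use("Storage", {"vmnic4"}))  # vmnic5
print(failover_command("Storage"))
# esxcli network vswitch standard portgroup policy failover set -p Storage -a vmnic4 -s vmnic5
```

In normal operation, Storage and Federation traffic each get their own 10/25GbE link, and either network moves to the other link when its preferred NIC fails.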

Of course, this is still VMware networking, so you can choose active/active, but HPE recommends the setup described above. Both vSphere Standard Switches and vSphere Distributed Switches are supported. SDN, for example NSX, is also supported for the VM and management traffic.

For the latest recommendations and best practices, I recommend checking the latest version of the HPE SimpliVity for vSphere Networking Best Practices document on the HPE Information Library website.

Physical network connectivity

When we look at the connectivity from the HPE SimpliVity nodes to the switches, there are several options available.

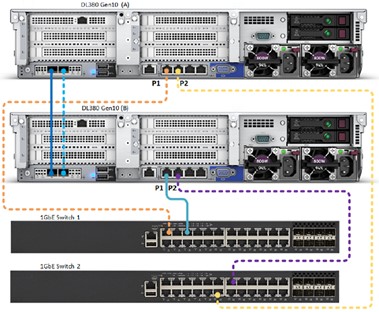

Direct Connect

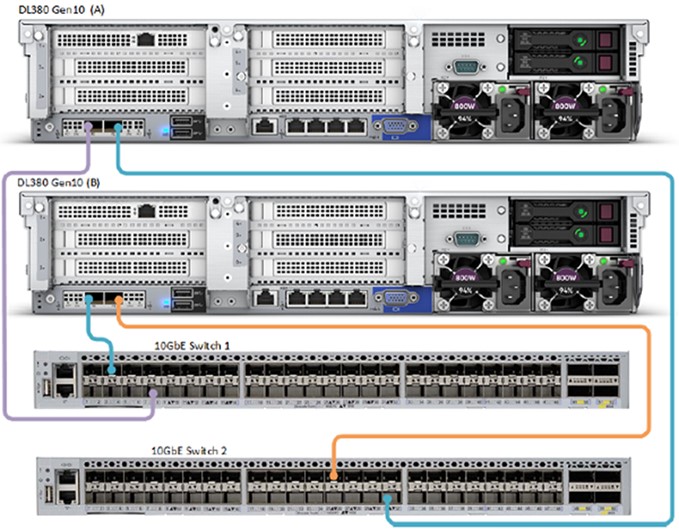

It is possible to deploy an HPE SimpliVity 2+0 Federation with two HPE SimpliVity 380 servers using a direct-connected network configuration, as shown above. It uses the 10GbE connections for the HPE OmniStack Storage and Federation networks.

Redundant 1GbE connections to 1GbE switches provide the Management network. In this setup, vMotion traffic is often enabled on the 10/25GbE interfaces as well for optimal performance.

Switch Connected cluster

The switch-connected network configuration for a Federation with two or more HPE SimpliVity 380 servers uses redundant connections to 10GbE switches for the HPE OmniStack Storage and Federation networks. It uses redundant 1/10GbE connections to 1/10GbE switches for the Management network.

This picture shows the layout when using HPE SimpliVity nodes with 1GbE interfaces, as explained above (for instance, the 380 model with accelerator card).

Newer models like the 325, 380G and 380H feature an NC motherboard, which no longer has the onboard 1GbE interfaces. This means all logical networks need to be enabled on the 2 or more 10/25GbE interfaces, which are connected to the same switches, as shown in the picture below.

If you have any questions about SimpliVity or any other HPE solution, feel free to contact us for additional support.
