You probably know that I am a big fan of Software Defined Storage (SDS). And apparently I am not the only one. I see more and more production implementations at small, medium and even large companies. All of the major storage vendors have some kind of SDS solution, and other IT leaders like VMware and Nutanix integrate SDS functionality in their products as well.

For those who are not completely aware of what SDS is, here is a short explanation. The Software Defined strategy splits the control layer from the data layer.

Software Defined Servers are servers that are independent of the hardware underneath them. You will of course know this better as virtualization (VMware, Hyper-V, Linux KVM, etc).

Software Defined Networking achieves the same goal by putting all the networking configuration (port trunking, STP, QoS, VLANs, etc) in a central SDN controller which allows automatic configuration and flow control on top of SDN enabled switches, independent of the manufacturer (Cisco, HP, Juniper, etc).

With Software Defined Storage we have a similar approach. VMware VSAN puts the SDS functionality inside the ESXi kernel, Microsoft calls it Windows Storage Spaces, and others (HP, EMC, Mellanox, Nutanix, etc) implement SDS with a VSA (Virtual SAN Appliance) approach, which is actually a VM running on top of a hypervisor.

In the end the result is the same: we create a shared storage SAN on top of local storage, independent of the hardware, disk type and/or connectivity underneath it.

What I particularly like about the HP StoreVirtual VSA is that it is 100% SDS: it can run on multiple hypervisors, has the most extensive HCL (hardware compatibility list), and is scale-up (1TB up to 50TB per node) and scale-out (2 up to 32 nodes) storage at the same time.

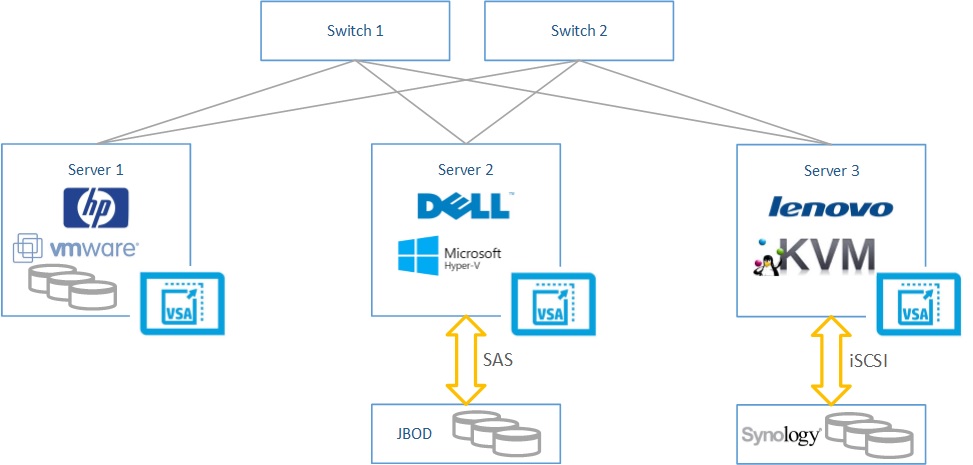

To illustrate the strength and the capabilities of SDS, I will try to explain it with the following picture:

The picture shows an example of the HP VSA deployed on hardware from multiple vendors (you get a 1TB license for free when you buy a server equipped with an Intel 2600v3 processor, no matter which brand), on multiple hypervisors and even on multiple types of storage (local SAS, JBODs and/or external iSCSI storage). The picture shows a fully working and supported configuration. Cool, right?

However! This is the ideal world but there is something called the real world as well. Let me explain it with a simple example.

Imagine this case: server A and server B have 10Gb connectivity and server C has 100Mb connectivity. What will be the speed of your SAN? Right: it sucks.

Another scenario: server A and server B have SSD storage, server C has SATA drives. What will be the speed of your SAN? Yes, you’re right again: it sucks.

Note: Network RAID is deployed on top of the StoreVirtual VSAs. Find more information on this here.
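To make the two scenarios above a bit more concrete, here is a minimal sketch in Python. It assumes a deliberately simplified model in which every write is mirrored synchronously to all nodes, so a write only completes once the slowest node has acknowledged it. The node names and figures are purely illustrative, and real Network RAID behavior depends on the Network RAID level you choose.

```python
# Simplified model (an assumption, not HP's actual algorithm): with
# synchronous mirroring a write is acknowledged only once every replica
# holds it, so the slowest node sets the pace for the whole cluster.

def effective_write_throughput(node_mbps):
    """Return the write throughput of the mirrored set in Mb/s."""
    if not node_mbps:
        raise ValueError("at least one node is required")
    return min(node_mbps.values())

# Illustrative link speeds in Mb/s: two 10Gb nodes and one 100Mb node.
nodes = {"server_a": 10_000, "server_b": 10_000, "server_c": 100}

# The node with the lowest figure is the bottleneck.
bottleneck = min(nodes, key=nodes.get)
print(f"Effective write throughput: {effective_write_throughput(nodes)} Mb/s")
print(f"Bottleneck: {bottleneck}")
```

The same reasoning applies if you replace link speed with disk throughput: one SATA node among SSD nodes drags the mirrored writes down to SATA speed.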

As a practical example: some time ago I assisted a customer who was trying the free 1TB HP VSA license and reported that performance was bad…

I asked him if he had followed all best practices (check out the Best Practices guides that exist for the HP VSA), like multiple iSCSI initiators in VMware, enabling 1 vmnic and disabling all the others per iSCSI adapter, enabling Round Robin multipathing on the volumes and so on… It all looked good. And indeed, performance was not what it should be.

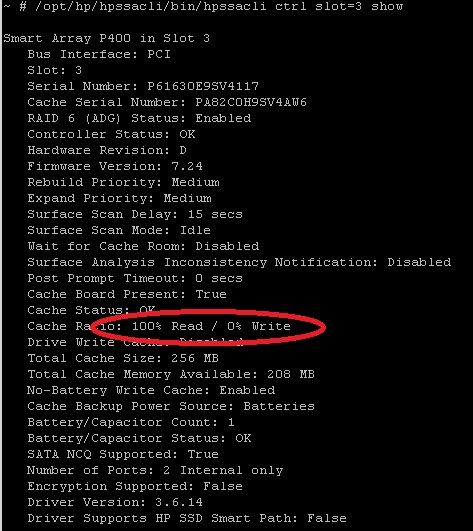

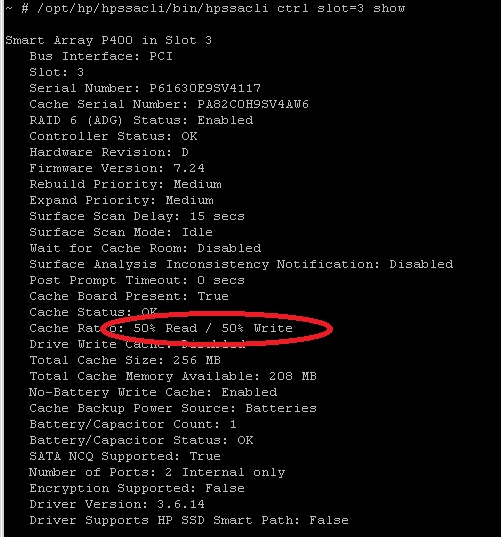

Digging a little further, we found this: the customer's setup was a DL380 Gen8 and a DL380 G7 with 12 disks each and 10Gb connectivity. However, the Gen8 server had a P420 RAID controller with 2GB of cache, while the G7 server had a P410 RAID controller with 256MB of cache. It gets even worse when we check the RAID controller settings (in Windows this is fairly easy with the SSA utility; in VMware it is a bit trickier since it needs to be done via the CLI, see below). It turned out that the G7 RAID controller was set to 100% read cache and therefore 0% write cache, so every write went directly to disk. The customer decided to upgrade the cache of the P410 to 1GB (which is the maximum for this controller) and change the R/W cache ratio to 50/50.

Result: there was a remarkable performance increase.
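To put numbers on the before and after situation of the G7 controller, here is a small Python sketch. The function name is my own, 1GB is taken as 1024MB, and the calculation ignores any cache the controller reserves for its own use, so treat the results as an approximation rather than what the controller will report.

```python
def cache_split_mb(total_cache_mb, read_pct, write_pct):
    """Split a controller cache into (read, write) portions in MB.

    Simplification: the whole cache is assumed to be available for the
    read/write split, which real controllers do not guarantee.
    """
    if read_pct + write_pct != 100:
        raise ValueError("read and write percentages must add up to 100")
    read_mb = total_cache_mb * read_pct // 100
    write_mb = total_cache_mb * write_pct // 100
    return read_mb, write_mb

# Before: P410 with 256MB of cache at a 100/0 read/write ratio.
before = cache_split_mb(256, 100, 0)
# After: P410 upgraded to 1GB (1024MB) of cache at a 50/50 ratio.
after = cache_split_mb(1024, 50, 50)

print(f"Before: {before[0]} MB read cache, {before[1]} MB write cache")
print(f"After:  {after[0]} MB read cache, {after[1]} MB write cache")
```

Going from no write cache at all to roughly half a gigabyte of it is why the writes stopped hitting the disks directly.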

Conclusion: the design and the components of the solution are very important to make this SDS based SAN a success. I often hear companies and people say that hardware is more and more a commodity and therefore not important in the Software Defined Datacenter. Well, I am not convinced at all.

I like the approach of VMware, which states that to enable VSAN you need both SAS and SSD storage (the HCL is quite restricted), just to be sure that they can guarantee performance. The HP VSA is much more open and has lower requirements, but then do not start complaining that your SAN is slow. You should understand that this is not the fault of the VSA but of your hardware.

To wrap up, here are some examples of non-ideal configurations for a VSA deployment:

/opt/hp/hpssacli/bin/hpssacli ctrl slot=3 show

=> Cache ratio is full read, no write

=> Can be changed with the following command: /opt/hp/hpssacli/bin/hpssacli ctrl slot=3 modify cacheratio=50/50

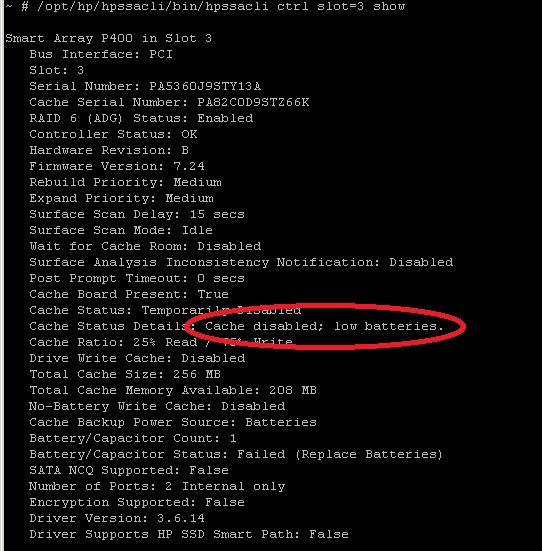

Another example: the cache ratio is OK, but the battery seems to be bad, so there is no cache at all.
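If you have to check more than a couple of hosts, both problems above can be spotted with a small script. The Python sketch below parses text in the style of the `hpssacli ctrl slot=3 show` output. Note that the sample text is hand-written for illustration and the field labels (`Cache Ratio`, `Battery/Capacitor Status`) are an assumption on my part, so compare them with the output of your own controller and firmware before relying on this.

```python
import re

# Hand-written sample for illustration only, NOT captured from a real system.
# The field labels are assumptions; verify them against your own output.
SAMPLE_OUTPUT = """\
Smart Array P410 in Slot 3
   Cache Ratio: 100% Read / 0% Write
   Total Cache Size: 256 MB
   Battery/Capacitor Status: Failed (Replace Batteries)
"""

def parse_controller_status(text):
    """Extract the cache ratio and battery status from controller output."""
    ratio = re.search(r"Cache Ratio:\s*(\d+)% Read\s*/\s*(\d+)% Write", text)
    battery = re.search(r"Battery/Capacitor Status:\s*(.+)", text)
    return {
        "read_pct": int(ratio.group(1)) if ratio else None,
        "write_pct": int(ratio.group(2)) if ratio else None,
        "battery": battery.group(1).strip() if battery else None,
    }

def warnings_for(status):
    """Return a list of human-readable problems found in the status."""
    problems = []
    if status["write_pct"] == 0:
        problems.append("no write cache: every write goes straight to disk")
    if status["battery"] is not None and status["battery"] != "OK":
        problems.append(f"battery/capacitor not OK: {status['battery']}")
    return problems

status = parse_controller_status(SAMPLE_OUTPUT)
for problem in warnings_for(status):
    print("WARNING:", problem)
```

In practice you would feed the function the real command output instead of the sample string, for example collected over SSH from each ESXi host.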

Some other interesting reading on VSA and VSAN and more:

Nigel Poulton: VSAN Is No Better Than a HW Array

http://blog.nigelpoulton.com/vsan-is-no-better-than-a-hw-array/

Chris Evans: VSAN, VSA or Dedicated Array?

http://blog.architecting.it/2014/10/21/vsan-vsa-or-dedicated-array/

As you can see, there are many opinions and visions on this topic. In this article I do not want to defend any type of implementation, vendor or vision. I just want to show how easily a software defined storage solution can become a success or a complete failure. No more, no less. And if people or companies tell you that hardware is becoming a commodity, well, I am not convinced, even in a software defined datacenter.
